Technology that supports dish washing with kitchen robots

Japan's aging society is expected to see an increase in households for which housework is a significant burden, such as single-person households and households with seniors who require long-term care. By reducing the burden of cleaning up dishes after a meal, the newly developed technology will let people devote more time to caring for seniors or children, working, or other activities, thereby helping to support housework and long-term care in an aging society.

“Cleaning up dishes after a meal” can be divided into four main processes: transporting dishes (clearing the table); disposing of leftovers; washing dishes; and putting dishes away. The newly developed technology for handling dishes is an elemental technology essential to all four of these processes. In addition to supporting dish washing, as presented here, it can be used to automate many other processes, including putting dishes away in cabinets.

The technology for handling dishes consists of two main technologies: (1) a real-time information feedback control technology for information obtained from multiple sensors; and (2) a manual search manipulation technology that uses MEMS touch sensors and an end effector with multiple types of sensors built in.

Outline of technology for handling dishes

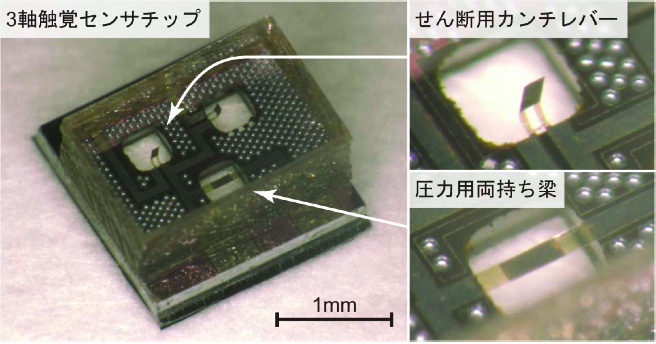

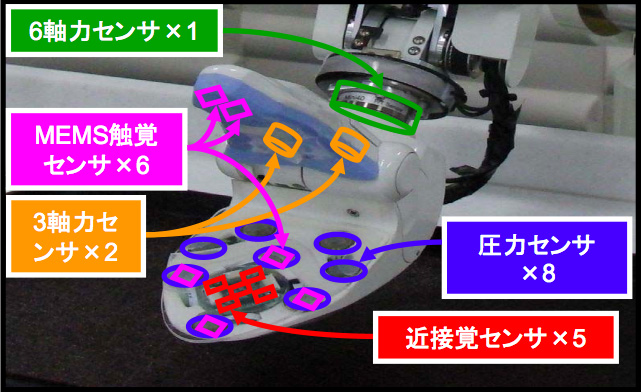

1. Real-time information feedback control technology for information obtained from multiple sensors

To handle dishes and other fragile objects without breaking them, robots must be able to respond instantaneously to touch information sent from multiple types of sensors and adjust their actions accordingly. One way to achieve a rapid response is to configure the robot to execute a specified action reflexively whenever a given piece of sensory information is obtained. With that configuration, however, the systems controlling the actions are frequently interchanged, so it is difficult to describe the actions required to achieve high-level goals such as cleaning up dishes after a meal. For actions with task goals of this kind, the sensory information that needs attention is limited, so an effective method is to have a facilitator that controls the actions monitor the sensory information in parallel as required, gathering information while deciding which actions to execute. We have developed a software configuration method for accomplishing complex tasks using the following approach: processes that require fast, simple responses are handled in parallel in separate threads; comprehensive judgments are made based on information with a wide scope in both time and space; and actions are planned accordingly.

2. Manual search manipulation technology that uses MEMS touch sensors and an end effector with multiple types of sensors built in

By using an end effector with multiple types of sensors built in, we have achieved a manipulation technology that handles dishes by “manual search”, that is, by feeling for the object with the hand, making effective use of the characteristics of each sensor. Before the robot touches the dishes, object surface information is obtained using a proximity sensor; after the dishes are touched, force information is obtained using pressure sensors and MEMS touch sensors. In the past, force information was obtained using 6-axis sensors in the wrist, but hand movement and the effects of weight introduced significant error, which impaired accurate movement. MEMS touch sensors can measure force in both the vertical and shear directions, so it is possible to adjust the grip force to control slipping of the gripped object and to adjust the pressing force, thus achieving actions that trace the surface of the object. The MEMS touch sensors consist of 2 cm x 2 cm ultra-compact MEMS 3-axis touch sensor chips embedded in a flexible rubber material. The University of Tokyo and Panasonic Corporation jointly developed MEMS touch sensors in which each individual sensor can measure weight with an accuracy of 0.3 g. These sensors won the “Most Outstanding Robot” award in the “This Year’s Robots” awards sponsored by the Japanese Ministry of Economy, Trade and Industry.
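The idea of adjusting grip force from vertical and shear force readings can be illustrated with a simple Coulomb friction model, in which an object starts to slip once the shear force exceeds the friction coefficient times the vertical (normal) force. The following is only a minimal sketch under that assumption; the function names, the friction coefficient, the safety margin, and the force limits are hypothetical and are not taken from the actual controller.

```python
def slip_is_imminent(normal_force, shear_force, friction_coeff=0.5):
    """True if the measured shear force exceeds what friction can hold.

    Assumes a Coulomb friction model: the grip holds while
    |shear| <= friction_coeff * normal. Forces are in newtons.
    """
    return abs(shear_force) > friction_coeff * normal_force


def required_grip_force(shear_force, friction_coeff=0.5, safety_margin=1.2):
    """Smallest normal force that holds the measured shear force, with a margin."""
    if friction_coeff <= 0:
        raise ValueError("friction_coeff must be positive")
    return safety_margin * abs(shear_force) / friction_coeff


def update_grip_command(shear_force, friction_coeff=0.5, safety_margin=1.2,
                        min_force=0.2, max_force=5.0):
    """Grip force command clamped to [min_force, max_force].

    The upper limit (a hypothetical value) protects fragile dishes;
    the lower limit keeps light contact with the object.
    """
    needed = required_grip_force(shear_force, friction_coeff, safety_margin)
    return max(min_force, min(needed, max_force))


if __name__ == "__main__":
    # A 1 N shear load with the assumed friction coefficient of 0.5
    print(slip_is_imminent(normal_force=1.0, shear_force=1.0))
    print(update_grip_command(shear_force=1.0))
```

Clamping the command on both sides reflects the two goals stated in the text: gripping firmly enough to control slipping, and not pressing so hard that a fragile object breaks.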

Reference: http://www.robotaward.jp/prize/01/ [in Japanese only]

(Technical explanatory materials)

(1) Real-time information feedback control technology for information obtained from multiple sensors

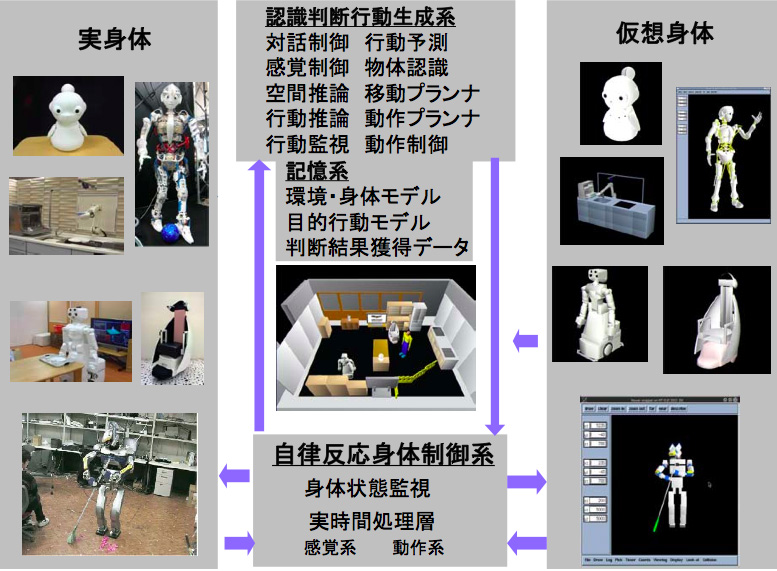

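1-1) Software that describes robots and performs recognition and action control

A high-level description software environment that operates, through a common interface, both a virtual body (describing the virtual body shape, structure, sensory system, and motor system of various types of robots) and the corresponding real body. This IRT base software environment provides functions for linking the high-level software with the real-time control system.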

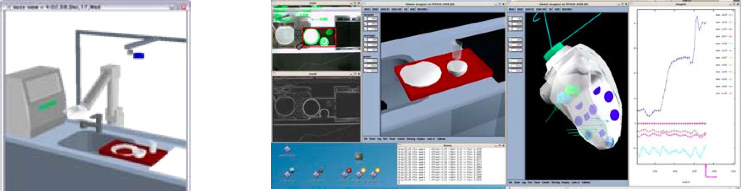

1-2) Robot action simulation and online monitoring environment

An example system description that visualizes the motion simulation and sensory information during manual search manipulation and monitors them online.

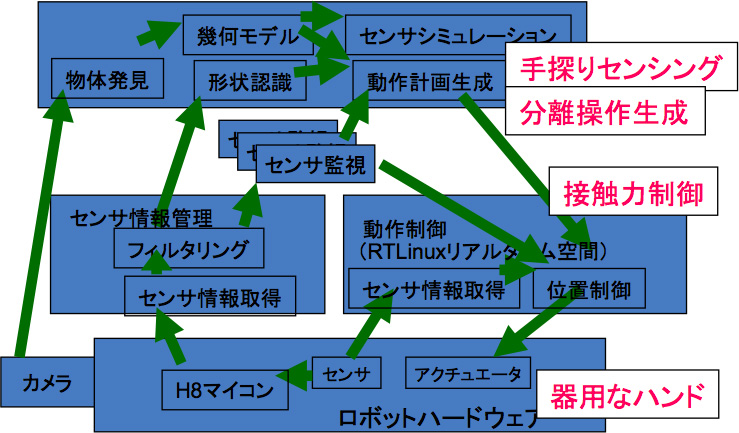

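1-3) System configuration for real-time feedback control of information from multiple sensors

A high-level processing description layer, which has automatic memory management and uses three-dimensional models of the environment and of actions, is integrated in a linkable form with a real-time processing description layer for sensorimotor behavior based on information from the multiple types of sensors on the robot body. This enables high-level real-time feedback control.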

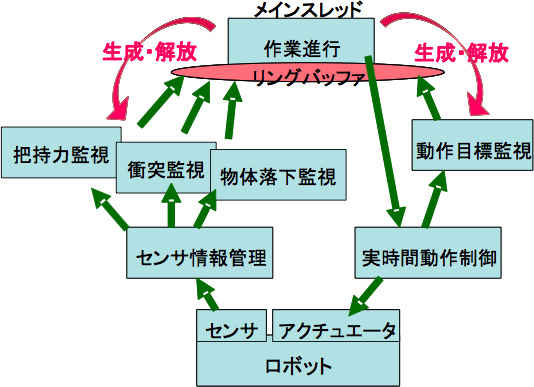

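1-4) Structure for creating and releasing parallel monitoring threads during action execution

A function of the IRT base software, which provides action simulation and online monitoring of the motor and sensory systems, that creates and manages various monitoring functions as needed while an action is being executed.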
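The pattern of creating a monitoring thread when an action starts and releasing it when the action ends can be sketched with Python's standard `threading` module. This is only an illustration of the general pattern, not the IRT base software itself; the class `SensorMonitor`, the function `move_until_contact`, and the polling periods are all hypothetical names and values.

```python
import threading
import time


class SensorMonitor:
    """Watches one sensor in a background thread while an action runs."""

    def __init__(self, read_sensor, threshold, period=0.001):
        self.read_sensor = read_sensor      # callable returning the current reading
        self.threshold = threshold
        self.period = period                # polling period in seconds
        self.triggered = threading.Event()  # set when the threshold is exceeded
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        # Fast, simple check that runs in parallel with the action.
        while not self._stop.is_set():
            if self.read_sensor() > self.threshold:
                self.triggered.set()
                return
            time.sleep(self.period)

    def __enter__(self):
        self._thread.start()                # create the monitor with the action
        return self

    def __exit__(self, *exc):
        self._stop.set()                    # release the monitor when the action ends
        self._thread.join()
        return False


def move_until_contact(step, read_force, threshold, max_steps=1000):
    """Execute motion steps until the monitor reports contact.

    Returns the number of steps that were executed.
    """
    with SensorMonitor(read_force, threshold) as monitor:
        for i in range(max_steps):
            if monitor.triggered.is_set():
                return i
            step()
            time.sleep(0.002)
    return max_steps
```

The action loop only checks a flag, while the threshold test itself runs in the separate thread. Using a context manager ties the lifetime of the monitor to the action, so the thread is always joined when the action finishes or fails.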

(2) Manual search manipulation technology that uses MEMS touch sensors and an end effector with multiple types of sensors built in

2-1) MEMS touch sensors

An ultra-compact MEMS 3-axis touch sensor chip in which standing piezoresistive cantilevers are embedded in a flexible rubber material.

2-2) End effector with multiple types of sensors built in

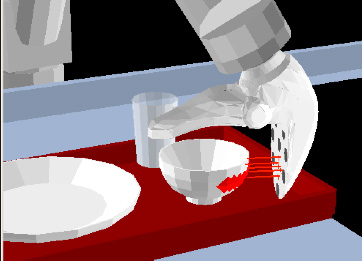

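2-3) Manual search manipulation of standard dishes

Standard dishes for dishwashers: a transparent cup, a white bowl, and a white plate, which are used to determine how many dishes fit in a dishwasher.

Because of transparent glass, reflections, and similar factors, vision is used only to recognize the approximate initial positions of the dishes. After that, the robot manipulates the dishes while confirming their state by feel, using the sensors in the hand.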

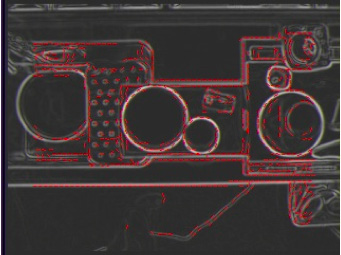

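2-3-1) Finding the dishes and recognizing their initial positions from images

Camera image viewed from above

Image showing the detected local directions of boundary lines

Candidates for standard dishes detected within the tray region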

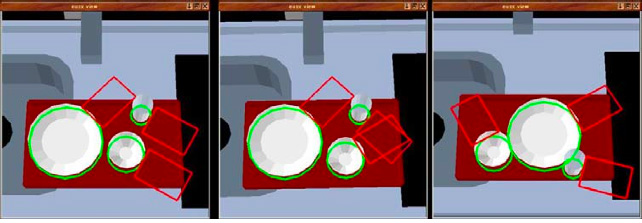

Recognition result: predicted initial placement of the geometric models of the standard dishes

2-3-2) Planning the approach direction for grasping dishes

Find the directions from which the hand can approach a dish in order to grasp it.
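One simple way to look for feasible approach directions is to test a set of candidate angles around the target dish and discard those whose straight approach path passes too close to another dish. The sketch below assumes a top-down 2D view in which every dish is approximated by a circle; this simplification, the function names, and all dimensions are hypothetical and only illustrate the idea, not the planner actually used.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b (all 2D tuples)."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection of p onto the segment, clamped to its end points
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def feasible_approach_angles(target, obstacles, hand_half_width=0.03,
                             standoff=0.15, n_candidates=16):
    """Return approach angles [rad] whose straight path to the target is free.

    target and obstacles are (x, y, radius) circles in the tray plane [m].
    """
    tx, ty, tr = target
    feasible = []
    for k in range(n_candidates):
        angle = 2.0 * math.pi * k / n_candidates
        # The hand starts `standoff` outside the rim and moves straight to the rim.
        start = (tx + (tr + standoff) * math.cos(angle),
                 ty + (tr + standoff) * math.sin(angle))
        end = (tx + tr * math.cos(angle), ty + tr * math.sin(angle))
        blocked = any(
            point_segment_distance((ox, oy), start, end) < orad + hand_half_width
            for ox, oy, orad in obstacles
        )
        if not blocked:
            feasible.append(angle)
    return feasible


if __name__ == "__main__":
    bowl = (0.0, 0.0, 0.06)       # target dish
    cup = (0.15, 0.0, 0.05)       # neighbouring dish to the right of the bowl
    angles = feasible_approach_angles(bowl, [cup])
    print([round(math.degrees(a), 1) for a in angles])
```

In this example the directions pointing toward the neighbouring cup are rejected, and the remaining angles are candidates from which the hand can reach the rim of the bowl.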

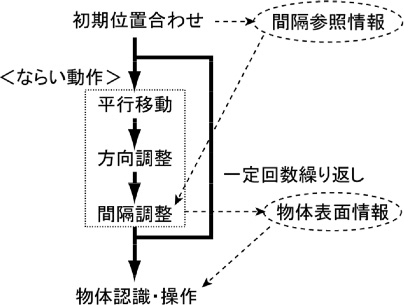

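2-3-3) Tracing the shape of dishes with proximity sensors

The robot is moved along the surface of the dish according to the distance measured by each proximity sensor, thereby acquiring information on the shape of the dish surface.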
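Surface tracing with a proximity sensor can be sketched as a loop that keeps the measured gap close to a target value while advancing along the surface and recording the surface points. The example below is a minimal single-sensor, one-axis illustration with a proportional correction; the function `trace_surface`, the callback `measure_gap`, and the gain, gap, and step values are assumptions made for this sketch, not the actual control law.

```python
def trace_surface(measure_gap, x_start, x_end, z_start,
                  target_gap=0.01, gain=0.5, step=0.002):
    """Move the hand from x_start to x_end while holding a gap above the surface.

    measure_gap(x, z) stands in for a proximity sensor reading [m] taken
    with the hand at horizontal position x and height z.
    Returns the estimated surface profile as a list of (x, height) points.
    """
    profile = []
    x, z = x_start, z_start
    n_steps = int(round((x_end - x_start) / step))
    for _ in range(n_steps + 1):
        gap = measure_gap(x, z)
        profile.append((x, z - gap))      # surface point directly under the sensor
        z += gain * (target_gap - gap)    # proportional correction of the gap
        x += step                         # advance along the surface
    return profile


if __name__ == "__main__":
    # Simulated sloped surface used in place of a real dish
    def surface(x):
        return 0.02 + 0.5 * x

    def gap_sensor(x, z):
        return z - surface(x)

    points = trace_surface(gap_sensor, 0.0, 0.1, z_start=0.05)
    print(len(points), points[0], points[-1])
```

Because each recorded point is the hand height minus the measured gap, the profile reflects the surface shape even while the gap is still converging to its target value.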

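2-3-4) Confirming dish height, grasp, and placement

Use of the various embedded sensors in each action: contact approach (left), grasp confirmation (center), and placement confirmation through the grasped object (right).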

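2-3-5) Loading the three types of standard dishes into the dishwasher and operating the dishwasher

Recognize the dish arrangement

Check the bowl

Grasp the bowl

Rinse the bowl

Place the bowl

Look at the next dish

Approach the cup

Place the cup

Approach the plate

Lift the plate

Place the plate

Push the tray

Close the door

Press the switch

Done / standby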
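The sequence of steps listed above can be represented as an ordered list that is executed one step at a time, stopping at the first step that fails so that a higher-level routine can decide how to recover. This is only a minimal sketch: the English step names are translations of the labels above, and `run_sequence` and its `execute` callback are hypothetical names, not part of the actual system.

```python
DISHWASHER_SEQUENCE = [
    "recognize the dish arrangement",
    "check the bowl",
    "grasp the bowl",
    "rinse the bowl",
    "place the bowl",
    "look at the next dish",
    "approach the cup",
    "place the cup",
    "approach the plate",
    "lift the plate",
    "place the plate",
    "push the tray",
    "close the door",
    "press the switch",
    "done / standby",
]


def run_sequence(steps, execute):
    """Run the steps in order and stop at the first failure.

    execute(name) is a caller-supplied function that performs one step
    and returns True on success. Returns (completed_steps, failed_step),
    where failed_step is None if every step succeeded.
    """
    completed = []
    for name in steps:
        if not execute(name):
            return completed, name
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    # Simulated run in which every step succeeds
    done, failed = run_sequence(DISHWASHER_SEQUENCE, lambda name: True)
    print(len(done), failed)
```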